On The Brink Of A Speech Revolution

How we interact with technology is changing fast - and voice recognition is a major driving force.

Speech recognition technology has been around for decades but has only recently become usable by the masses as the power of GPUs has increased. Broadly speaking, there are two types of speech recognition: voice interaction/command and continuous speech recognition.

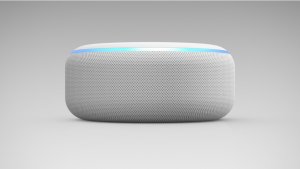

Most people are familiar with voice interaction, as this technology is used in Apple’s Siri or Amazon’s Alexa. It recognises keywords or phrases, such as “Siri, where is the nearest coffee shop?”, and then triggers an action via natural language processing (NLP) techniques.

However, the so-called “phrase spotting” approach doesn’t work when it comes to continuous speech recognition. The system simply doesn’t know what the speaker is going to say next when transcribing telephone calls, providing TV subtitles or performing email dictation.
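To make the contrast concrete, here is a minimal sketch of phrase spotting. It is a deliberate simplification: it works on text that has already been transcribed rather than on audio, and the `ACTIONS` table, its trigger phrases and the `spot_phrase` function are hypothetical names invented for illustration, not part of any real assistant.

```python
from typing import Callable, Dict, Optional

# Hypothetical phrase-to-action table; phrases and handlers are illustrative only.
ACTIONS: Dict[str, Callable[[], str]] = {
    "nearest coffee shop": lambda: "searching for coffee shops nearby",
    "set a timer": lambda: "starting a timer",
}


def spot_phrase(utterance: str) -> Optional[str]:
    """Run the first action whose trigger phrase appears in the utterance."""
    text = utterance.lower()
    for phrase, action in ACTIONS.items():
        if phrase in text:
            return action()
    # No known phrase was found, so a phrase spotter has nothing to act on.
    return None


print(spot_phrase("Siri, where is the nearest coffee shop?"))
print(spot_phrase("Dear Anna, thank you for your email from last week"))
```

The second call returns `None`: free-form dictation contains no pre-defined trigger, which is why continuous speech recognition has to transcribe every word rather than listen for a fixed set of phrases.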

Continuous speech recognition has seen some dramatic improvements over the past few years. Sadly, recent claims of better-than-human accuracy are setting unrealistic expectations in the market.

The systems behind these claims are often academic: they run many times slower than real-time systems and have much larger memory footprints, which enables them to achieve high accuracy levels but limits their commercial application.
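Accuracy in speech recognition is commonly reported as word error rate (WER): the number of word substitutions, insertions and deletions needed to turn the system's output into a reference transcript, divided by the number of words in the reference. The sketch below is one simple way to compute it, assuming whitespace tokenisation and case-insensitive matching; the function name `word_error_rate` is our own, and real evaluations usually normalise text more carefully.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            ))
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate(
    "where is the nearest coffee shop",
    "where is the nearest copy shop",
)
print(f"WER: {wer:.1%}")
```

One wrong word in a six-word sentence already gives a WER of about 17%, which shows how sensitive headline accuracy figures are to the test material they are measured on.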

The availability of open source toolkits such as Kaldi and the progress in accuracy have led to several new vertical companies developing speech analytics applications. For example, Gridspace and Chorus mainly focus on the contact centre space and are going up against Nuance, an established speech company.

In other territories, iFlyTek dominates the Chinese speech market and, with a market cap of circa $12bn, is the largest speech company in the world. Baidu is also active but the direction in which they will go remains to be seen, especially following Andrew Ng’s departure.

Last but not least are the tech giants, Google, IBM and Microsoft, who all offer cloud-based speech services and typically focus on short-form audio consisting of a few words. Google recently expanded their service to 69 distinct languages (130 with dialects), vastly outperforming IBM (7) and Microsoft (6).

With all three, users send their data to the respective cloud service, making them suitable only for use cases where neither data privacy nor data volume is an issue.

What sectors are likely to be disrupted next?

Much of the current hype is around virtual personal assistants, or VPAs, such as Alexa and Siri.

However, what will truly disrupt the consumer market is conversational speech technology that adapts to its users and functions across different devices - for example, you could start dictating an email in the car and finish it at home whilst cooking. This functionality will also disrupt the in-car experience.

Another market in need of a refresh is subtitling. The ability for broadcasters to add new words to the dictionary will be critical. The need for this was recently highlighted by a mishap at the BBC, where Newcastle United were accidentally referred to as ‘black and white scum’ by the subtitling system.

Content discovery via media subtitling will lead to interesting new marketing and revenue approaches, especially as content on the internet and social media is increasingly consumed in audio and video formats.

The advent of MiFID II, GDPR and a host of other financial market regulations is leading to increased adoption of speech technology. For instance, the technology will soon have the potential to monitor traders in real time and provide immediate insight when trader mis-selling or PPI fraud occurs.

Real-time speech analytics have long been heralded as the holy grail of speech recognition, especially given the large volumes of call centre data. With many new companies now pushing into this space, it is possible that one of them will develop a system that can accurately predict customer churn or upsell opportunities.

In the long term, the medical market is set to be disrupted through new applications of speech technology. One example is measuring the progress of Alzheimer’s in real time via vocabulary complexity, allowing physicians to adjust medication dosage. Another is measuring the emotional state of individuals via sentiment analysis and grammar evaluation, potentially supporting patients suffering with mental health problems.
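As a rough illustration of what a vocabulary complexity measure could look like, the sketch below computes a type-token ratio: the share of distinct words among all words in a transcript. This is our own stand-in chosen for simplicity, not a clinical measure and not a method named in this article; the function name `type_token_ratio` and the sample sentences are invented for the example.

```python
import re


def type_token_ratio(transcript: str) -> float:
    """Share of distinct words among all words; 0.0 for an empty transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    if not words:
        return 0.0
    return len(set(words)) / len(words)


# Invented sample transcripts, for illustration only.
varied = "we walked along the river and watched herons fishing at dusk"
repetitive = "we went to the the place and we went to the place"

print(round(type_token_ratio(varied), 2))
print(round(type_token_ratio(repetitive), 2))
```

A lower ratio means more repetition. The ratio also tends to fall as transcripts get longer, so any comparison over time would need samples of similar length.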

Seamless voice integration

Seamless voice integration will depend on several factors, including good accuracy across use cases and being independent of dialect.

Extrapolating from current progress, it is possible that we will achieve this in the next 3-5 years. User personalisation of speech - generic systems adapting to the individual’s way of speaking and specific words being used - will become critical, as the last 5-10% accuracy is user specific.

The key to achieving seamless voice integration is to ensure cross-device functionality. Apple do it well across their product suite, whereas Google are trying to achieve this through their Android platform, and Amazon by selling Amazon Alexa at a loss and integrating it with third parties.

For everyone else, relying on these platforms involves several risks, such as dependency on one or two providers and data ownership issues.

From a device manufacturer’s point of view, the true value of speech technology lies within the data it generates, and in this model, it goes to either Google or Amazon. The applications also require a good data connection in order to perform well, which limits the ability to use voice technology in cars, rural areas or areas with poor internet connectivity.

With Microsoft now also pushing into the consumer voice space, the race is on for seamless voice integration, but until a non-data player emerges the battle will revolve around those who can collect the most useful consumer data.

Hurdles that stand in the way of a voice-driven society

For a voice-driven society to emerge, speech technology will have to address accuracy across use cases and languages as well as ease of integration and operation, without limiting functionality and operational performance. It will also need to address potential user concerns around data privacy and ownership.

There is no doubt that we’re heading for a speech revolution. This shift has a huge amount of potential to change how we interact with technology and others around us, breaking down the barriers of communication and uncovering new horizons.

Benedikt von Thungen, CEO, Speechmatics.
