The Best Protection From Deepfake Videos Is You

Deepfakes are a growing threat to business and democracy. But now you can fight back.

First, a video of US politician Nancy Pelosi apparently slurring her words. Now a disturbing clip of Mark Zuckerberg. The rise of convincing “deepfake videos” is creating a very real challenge for political candidates and media personalities today, who face a world where they could, in theory, be shown saying almost anything.

So, should we be worried about deepfake videos? How are they created? How do we spot the difference between real and fake videos? And what can be done about them?

Over the past few weeks, several pieces of research on a new AI-based approach to creating deepfake videos have been released, leaving many people worried about a near-term future in which nothing you see can be trusted.

Of course, this fear is understandable, and the apparent ease with which fake content can be created only makes the problem seem more real.

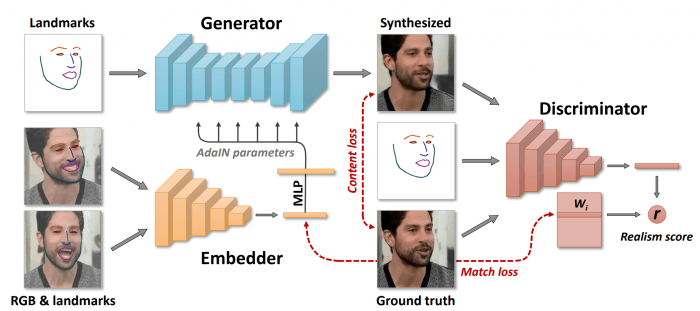

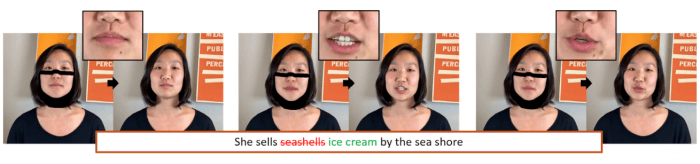

Until now, researchers needed a large library of images, or a long video of the target individual, in order to create new content. The new research provides a breakthrough in the form of a new approach to modelling facial expressions: a generic model of facial movements is applied to a single image to create a relatively convincing facsimile of the target individual talking.

In simple terms, the researchers have created a library of facial movements (learned from the vast quantity of publicly available talking-head videos) that can be applied to a single image of a face and synchronized with any speech.

Quite literally, the researcher can type in what they want the head to say, and the technology will make it look as if that individual had actually said those words.

It doesn’t take much to imagine the kind of havoc this technology could cause, especially at a time when the political environment on both sides of the pond is super-charged. But rather than jump on the panic bandwagon, it’s important for us to take a step back and look at how this is likely to play out in reality.

The ability to create convincingly fake images or video is hardly new. Hollywood has been making a pretty good living from creating believable illusions for decades, and in a sense all we’re really talking about here is making some of that movie magic a little more accessible.

In the short term, while people remain unaware that fake videos exist, the danger is real and could pose a significant threat to modern society unless we do a couple of things:

Firstly, we need to have this conversation: members of the public need to know how easy it is to create convincing fake videos, and how much easier it will become in the future.

That will help drive a debate, not about whether the technology should be banned (it can’t be), but about the need to do more than take what we see and read for granted.

Secondly, we need to remember that the power of this technology cuts both ways: increasingly, the very technology that makes this capability possible can also be deployed to help separate truth from fiction.

YouTube’s Content ID system already uses similar technology to check uploaded videos for copyrighted content before they are published.

Similarly, Microsoft has a technology called PhotoDNA for Video which, although currently used as a tool to help prevent online child exploitation, could be used to provide a “digital fingerprint” for authentic videos that would make fake videos easy to identify.

Additionally, even as I type this, computer scientists are turning the technology on itself, with researchers at the USC Information Sciences Institute (USC ISI) announcing new software that uses AI to detect deepfake video with 96% accuracy.

Despite the advances in “anti-deepfake” software that will inevitably arrive, it’s crucial we understand that we cannot leave it to the technologists alone to solve the problem.

The real issue that all the media panic about fake news and deepfake videos completely misses is that now, more than ever, is the time for us to lean in to critical thinking and to rediscover the joy and importance of good journalism.

With every single piece of content we consume, from whatever source and on whatever topic, we need to be asking ourselves whether we should believe the content to be true rather than simply assuming it is.

With every mouse click and finger swipe, we should be thinking about the veracity of the content and our level of trust in the publisher or platform that provides it, and, if necessary, doing some quick checks online to see whether it can be substantiated by other trusted sources.

Deepfake videos are not going to go away, and in a sense that will be their downfall.

As soon as the public comes to expect the possibility, and the technology becomes increasingly consumerised (I’m betting you’ll be downloading a “deepfake app” within the next five years that will provide hours of entertainment for you and your friends, just as today’s crop of “face-swap” apps do), their potency will go the same way as moving images of you with cat ears and whiskers: funny the first time, mildly amusing the second, but obvious and boring from then on.

As with all disruptive technologies, the real power comes not from the technology itself, but from the people who use it and the impact it has on those who consume the results.

We can’t control whether people direct the technology towards good or bad ends, but we can help those who consume the results acquire the basic skills (like critical thinking) that will empower them to maximise the technology’s potential for good while minimising the risk of harm.

Dave Coplin is an established technology expert, speaker and author, providing strategic advice and guidance around the impact of technology on a modern society in order to help organisations and individuals embrace the full potential that technology has to offer - www.theenvisioners.com
