Artificial intelligence is all the rage. Software is updating at light speed and everyone's talking about what it will soon be able to achieve. But aren't we getting ahead of ourselves?

This year at SXSW, VR was everywhere but it felt like artificial intelligence was poised to have the biggest impact on our lives. From computers playing Go to autonomous cars, the conversation had shifted from possibility to reality.

On the last day, four experts, including myself, took part in a panel to discuss the way these algorithms can affect our free will, our sense of self, and society as a whole.

On the panel we focused on wider social consequences of algorithmic services, particularly regarding race, gender, and financial inequalities. This article takes a slightly different perspective, focusing on what it means to each of us as individuals.

The argument is very simple: over the last five years, intelligent products and services have inveigled themselves into all parts of our lives. From dating to deliveries, finance to fitness, start-ups have created algorithm-powered services that solve our problems in ever more personalised and persuasive ways.

We need to think seriously about this. Under the cover of convenience, these services are changing the way we see the world and the way we think. They represent a profound challenge to our sense of who we are.

How much do we value our free will? How much are we prepared to sacrifice for convenience?

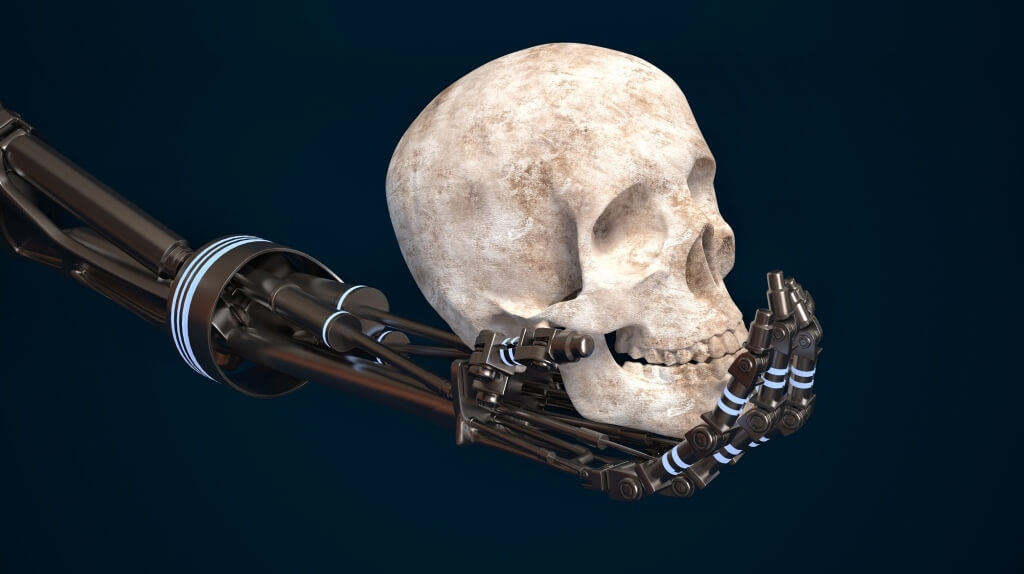

We should talk about this now while there is time to make these services work for us. If we don't, we risk reducing ourselves to being the meat extensions of their algorithms. And no one wants that sci-fi future.

Of course, products, services, and media have always changed the way we think. The concerns about video games and films are well known. The printing press caused some consternation when it "launched". Everything we design changes us. Even a humble hammer changes the way we think about things we might want to hit.

But intelligent services are different. They seem like tools but we cannot fully control them. We act through them but they act back. They have their own intelligence, which serves their own agenda as well as ours. And they get better and better at it all the time by continuously adapting, applying what they've learned from millions of other users. The more they learn, the more influence they gain.

This may all be a good thing. The services are good; they solve our problems. And as they get better they have the potential to bring out the best in us, or at least mitigate our failings. There are things we find genuinely difficult, like finance, or even keeping in touch with more than a few people. They can fix us. They can give us what ten years ago would have looked like superpowers.

But we should approach it critically. In this algorithmic future, what counts as better? And what else comes along with these new superpowers?

For example, being able to find and chat with people who share my views is pretty miraculous, especially having grown up in a small town, but what if that means I no longer see people who disagree with me? What if a financial service prioritises my long-term interests but makes it harder for me to take the spontaneous decisions which are so much a part of who I am?

Are we happy to leave it to the (predominantly white, male, and wealthy) people that own these services to decide? Do we believe "the market" will take care of us?

The easy option is to dodge the question of what's right and focus on outcomes. Financial businesses judge themselves by whether they make their customers wealthier. Social networks by the level of social engagement. Dating businesses by number of (good) dates.

This is easy because it's aligned with the goals of the business. Practically, it also gives a clear set of variables for an algorithm to optimise for. And it reflects the scientific culture that many tech entrepreneurs grew up in.

Even leaving aside security concerns, this approach should make us nervous.

First, there is a question of basic ethics. Another word for "outcomes" is "ends", and we know that they do not justify means. Making someone richer is no good if, in doing so, you make some other aspect of their life, or someone else's life, worse in some unanticipated way.

Second, there is a risk we become dependent. What happens if these services are suddenly unavailable? Will we be helpless? Will we find we have outsourced too much of our minds to Silicon Valley?

Third, at a more profound level, by focussing on outcomes, do we lose the intangible benefits of achieving those outcomes for ourselves? Perhaps we learn less. Perhaps we exert less willpower. Perhaps we no longer have to cooperate with others. These are all ways in which we learn about ourselves and, in a way, become ourselves.

There is, thankfully, another way. What if, instead of designing with a single outcome in mind, our goal was to help people become more themselves?

We would probably try to encourage reflection and self-understanding. Prioritise teaching over telling or automation. Give people tools that augment their natural abilities rather than replacing them.

We might even spend more time thinking about the role of the service in society rather than focussing on what it does for an individual.

In short, by trying to do less, we could enable people to be far more. To borrow a buzzword, we could call it mindful design.

This will not happen by accident; we will have to take deliberate steps. At the highest level, we need to put this on the political agenda. And from the bottom up we need to make sure we are educating people about these issues. That might mean that in school, instead of focussing on getting people to code, we encourage broader media literacy, which is really algorithm literacy.

This discussion should start soon. Politics is already starting to grapple with the implications of intelligent services on tax, job security and inequality. Now we need to find a way to talk about the implications they have for who we are and who we want to be.

James Buchanan, Strategy Director at Razorfish London.